AI Pub Dev: An MLOps tool with a web user interface

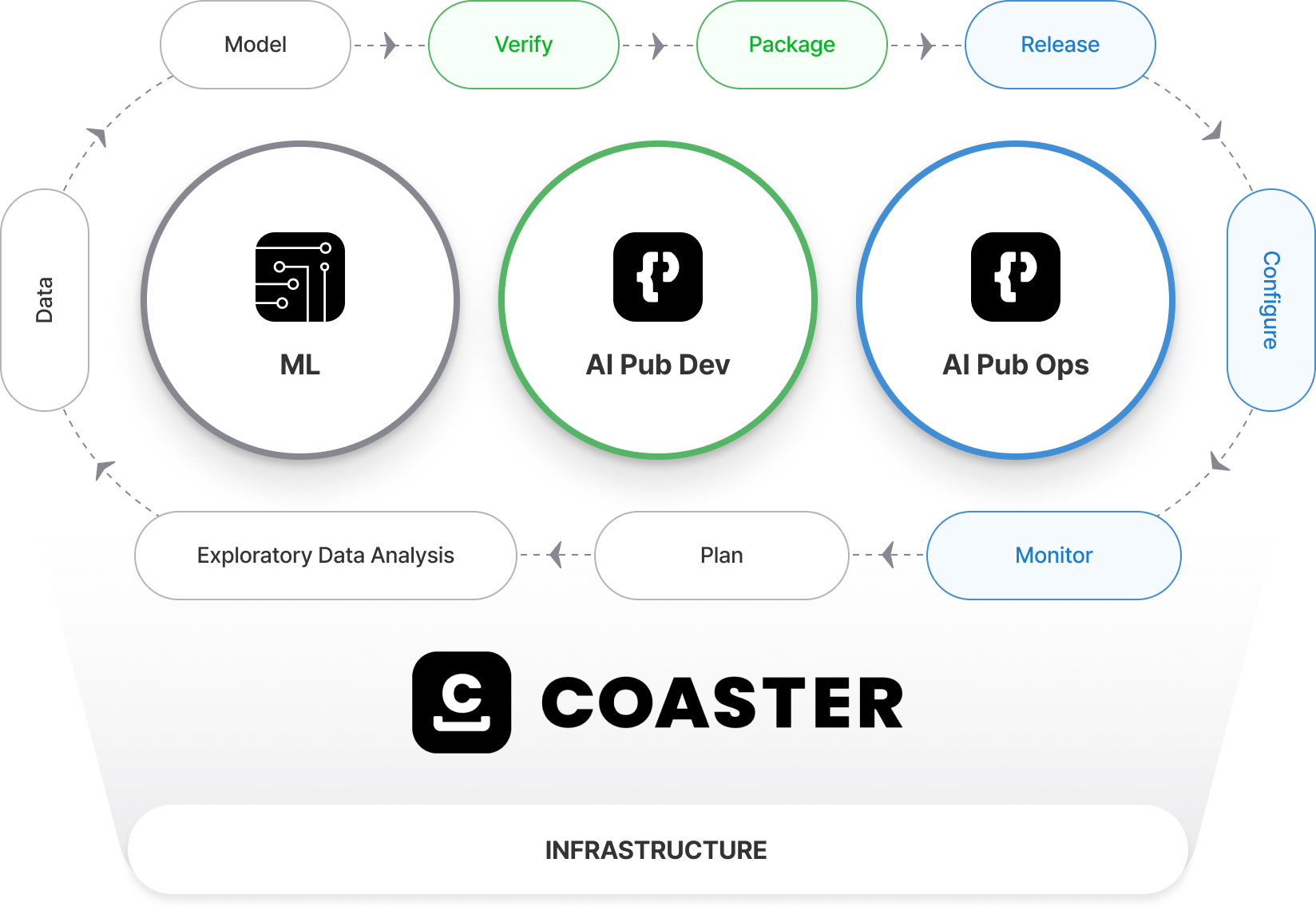

AI Pub Dev is built on Coaster, a container platform, designed to support efficient management of GPU infrastructure resources for your AI development and training.

Coaster, AI Pub Dev, and AI Pub Ops enable you to create value throughout the MLOps lifecycle.

They facilitate an efficient process for AI development and operation, making it easier to achieve your goals.

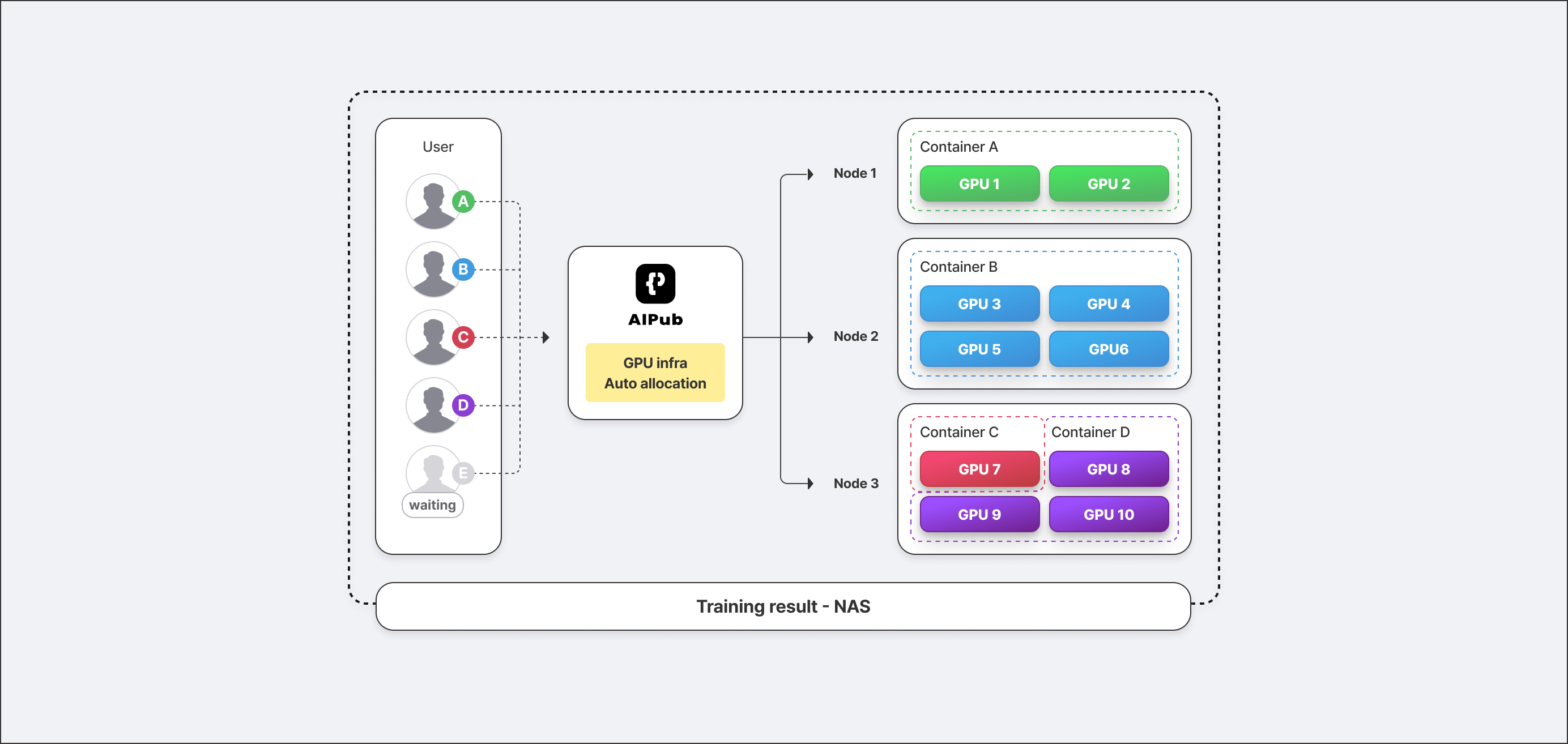

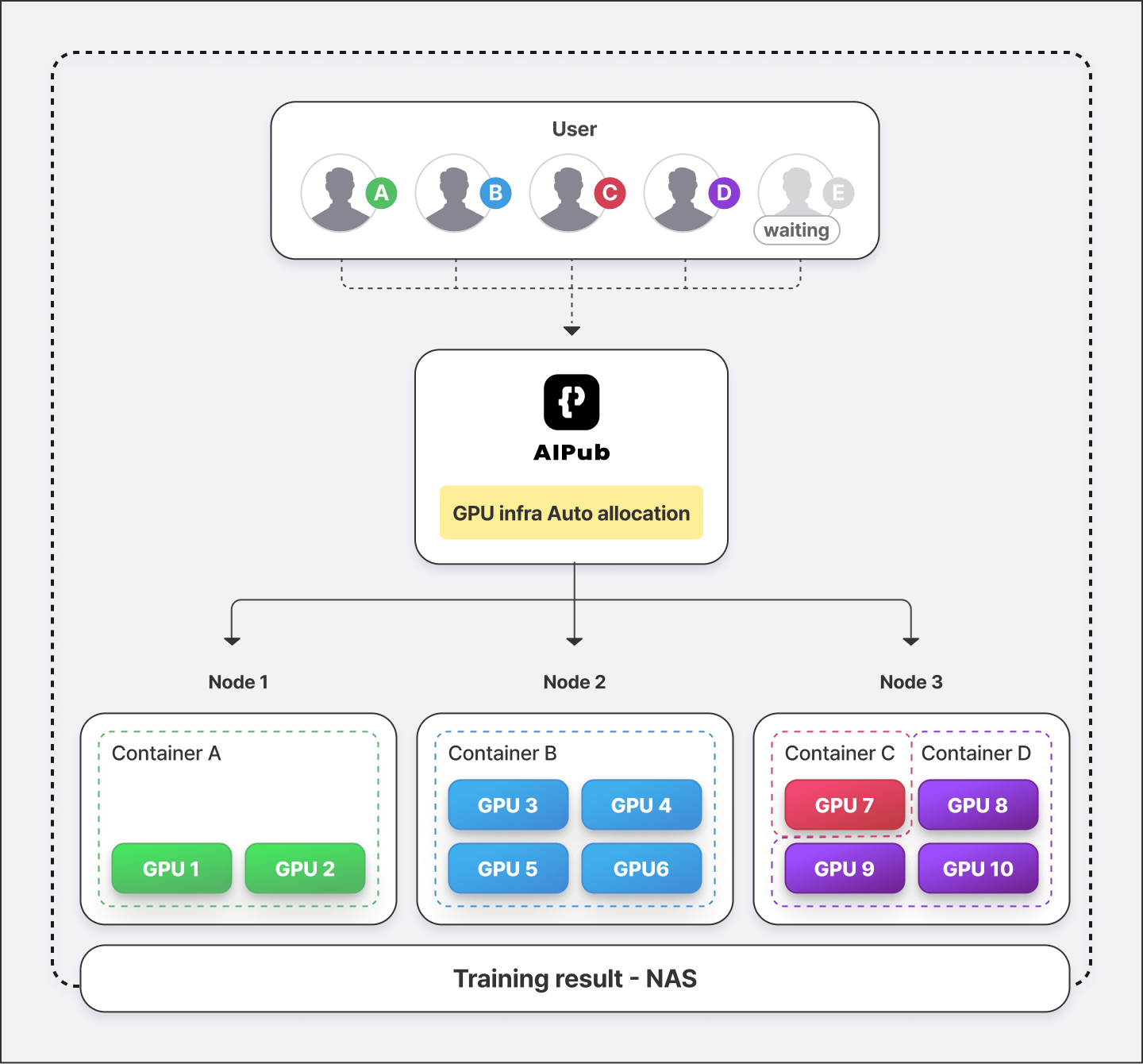

AI Pub Dev is a resource management tool designed for AI development and training.

It allocates limited AI infrastructure among multiple AI developers so that each can use their allotted GPU infrastructure resources efficiently for their specific tasks.

AI Pub Dev also allows the administrator to manage the GPU infrastructure resources according to various infrastructure patterns.

Discover AI Pub Dev’s functions

With Coaster at its core, AI Pub Dev offers fully-managed services for model training as well as resource and workload management.

Manages the user’s development environment via Docker images

Creates workspaces using development images

Facilitates integration with Jupyter Notebook and TensorBoard

Automatically allocates the necessary resources for each AI training job

Lets users apply for GPU and CPU resources

Restricts resource usage per user account

Reclaims idle resources

Manages workspaces and sets MIG for each node

Monitors the entire infrastructure

Creates resource groups and sets user authorization (for managers)

Edits resource groups

Manages suspension/resumption of schedulers

Oversees job scheduling and prioritization

Manages resource usage history for each user account

Downloads usage history

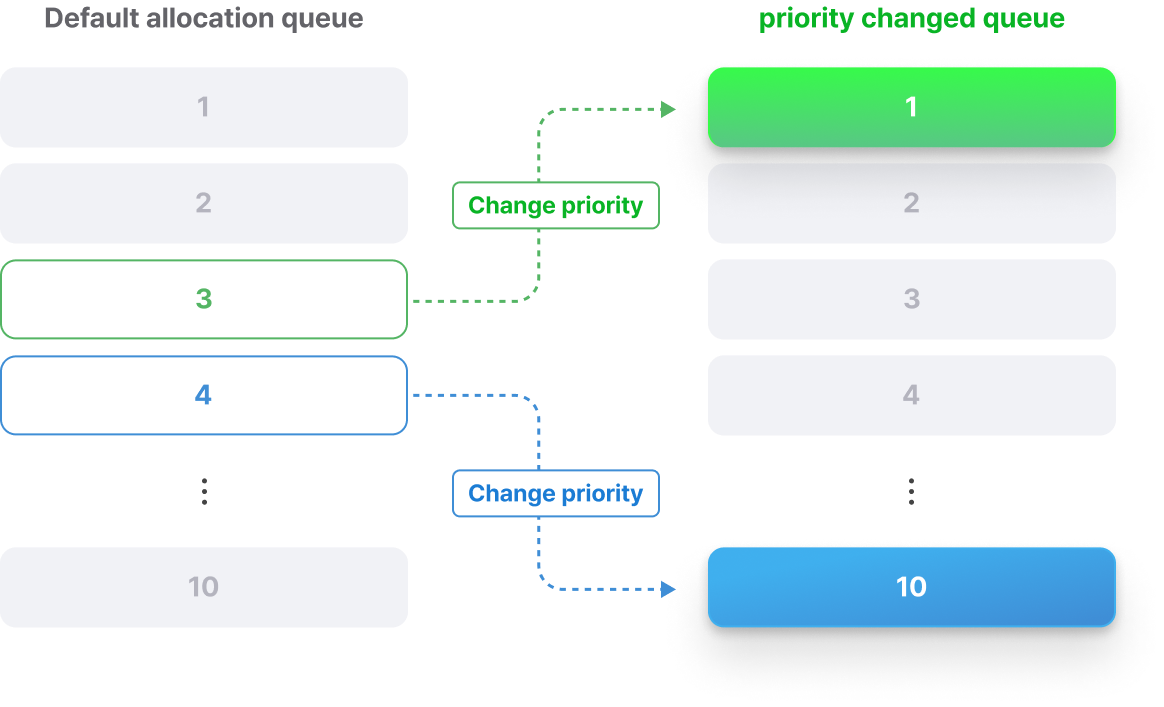

Determine resource allocation priorities with ease

Have you ever struggled with deciding on the priorities for allocating GPU infrastructure resources?

With AI Pub Dev, you can easily manage and adjust the order of the resource allocation queue.

The scheduler on/off function also allows you to respond flexibly to any changes that may occur.
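As a rough illustration of the idea, and not AI Pub Dev's actual implementation or API, an adjustable allocation queue with an on/off switch could be sketched as follows. The names `Job`, `AllocationQueue`, `move`, and `next_job` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Job:
    name: str
    gpus: int  # number of GPUs the job is waiting for


@dataclass
class AllocationQueue:
    """Hypothetical allocation queue: index 0 is served first."""
    jobs: List[Job] = field(default_factory=list)
    enabled: bool = True  # stands in for the scheduler on/off function

    def submit(self, job: Job) -> None:
        """Add a job to the end of the queue."""
        self.jobs.append(job)

    def move(self, name: str, new_position: int) -> None:
        """Move a queued job to a new position (0 = highest priority)."""
        for index, job in enumerate(self.jobs):
            if job.name == name:
                self.jobs.insert(new_position, self.jobs.pop(index))
                return
        raise KeyError(f"no queued job named {name!r}")

    def next_job(self, free_gpus: int) -> Optional[Job]:
        """Return the head job if the scheduler is on and the job fits."""
        if not self.enabled or not self.jobs:
            return None
        if self.jobs[0].gpus > free_gpus:
            return None
        return self.jobs.pop(0)


queue = AllocationQueue()
queue.submit(Job("train-a", gpus=2))
queue.submit(Job("train-b", gpus=1))
queue.move("train-b", 0)                 # an administrator reprioritizes

queue.enabled = False                    # scheduler switched off
paused = queue.next_job(free_gpus=4)     # nothing is allocated while off

queue.enabled = True                     # scheduler switched back on
resumed = queue.next_job(free_gpus=4)    # the reprioritized job goes first
```

In this sketch, switching the scheduler off leaves the queue order intact, so priorities can be adjusted while allocation is paused.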

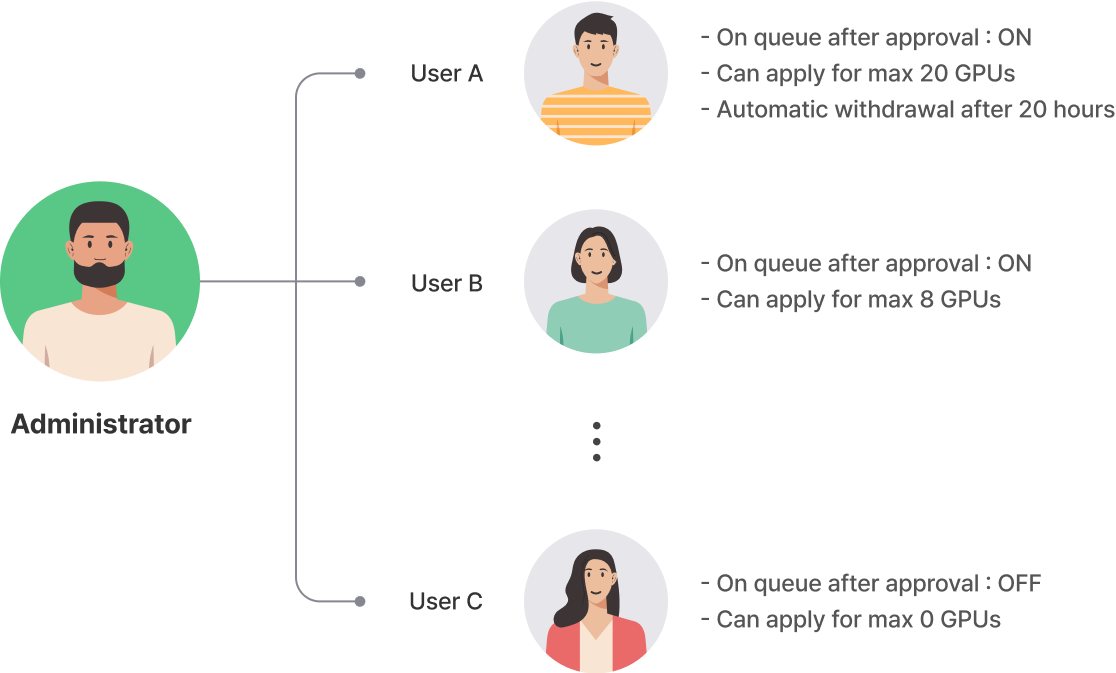

Fragment MIG into the smallest units for efficient resource management

AI Pub Dev lets you use MIG to partition each GPU into 1 to 7 fragments, down to the smallest unit, for finer-grained resource management.

You can also set resource application limit policies for each user account.
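To make the two rules above concrete, here is a minimal sketch of the checks they imply. It is an assumption about how such a policy could be expressed, not AI Pub Dev's code: the function names and the idea of counting a limit in MIG fragments are hypothetical, and only the 1 to 7 range comes from the text.

```python
MAX_MIG_FRAGMENTS_PER_GPU = 7  # from the text: 1 to 7 fragments per GPU


def validate_fragment_count(count: int) -> int:
    """Return count if it is a valid number of fragments for one GPU."""
    if not 1 <= count <= MAX_MIG_FRAGMENTS_PER_GPU:
        raise ValueError(
            f"fragments per GPU must be 1..{MAX_MIG_FRAGMENTS_PER_GPU}, got {count}"
        )
    return count


def can_apply(requested: int, in_use: int, limit: int) -> bool:
    """Check a request against a hypothetical per-account application limit.

    requested: fragments the account is applying for
    in_use:    fragments the account already holds
    limit:     maximum fragments the account may hold at once
    """
    return requested > 0 and in_use + requested <= limit


within_limit = can_apply(requested=2, in_use=3, limit=5)
over_limit = can_apply(requested=3, in_use=3, limit=5)
```

Here `within_limit` is `True` and `over_limit` is `False`, since the second request would take the account past its limit of 5.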

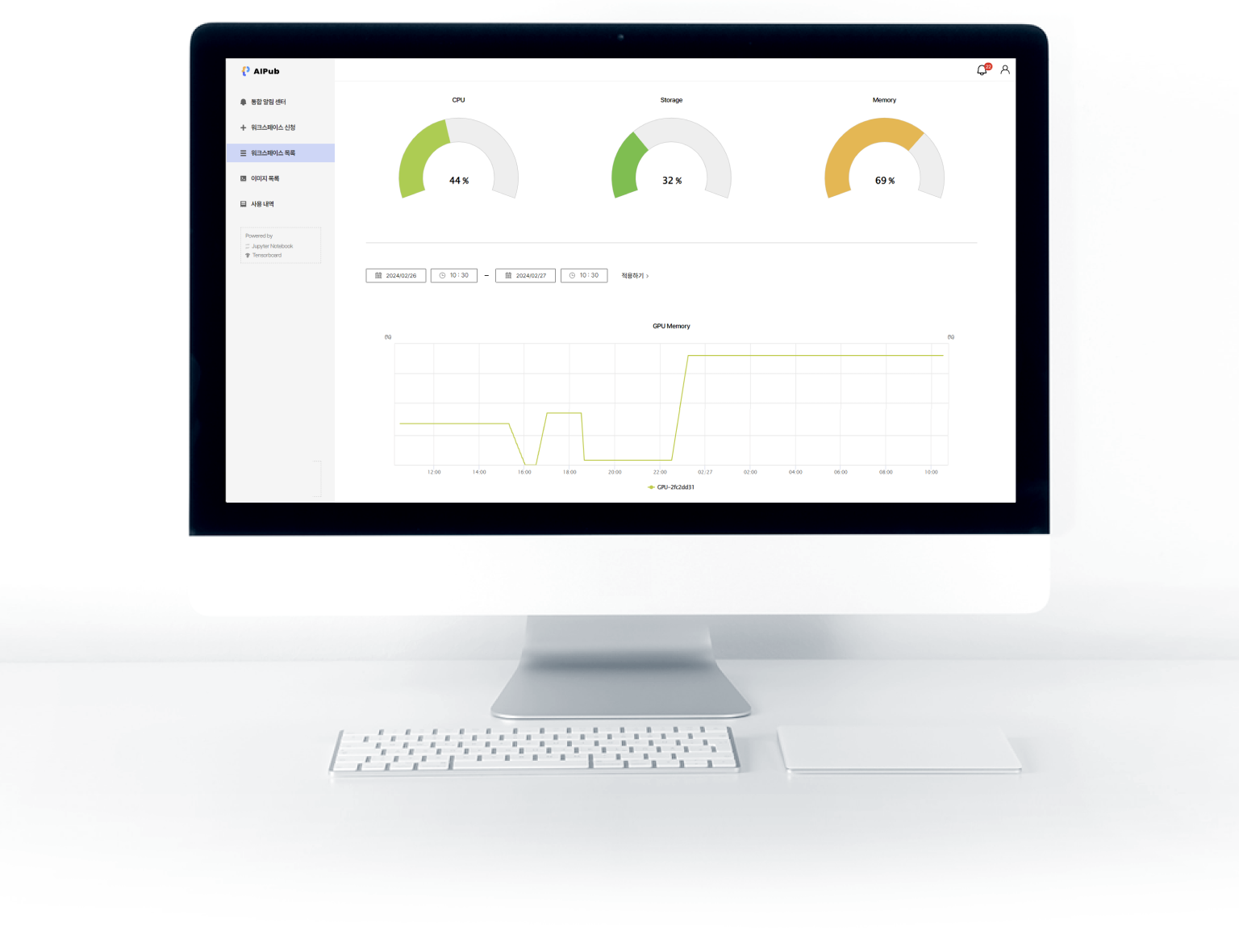

Monitor the status and operation rate of GPU resources in real time

Discover whether you are utilizing your GPU resources efficiently.

Monitor real-time resource status and operation rates for each cluster, node, and project.

Additionally, you can track the resource usage amount and utilization rate for each account.
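As an illustration of what a per-node or per-account rate means, the sketch below averages utilization samples by a chosen key. The sample format and the `mean_utilization` function are assumptions made for this example; they do not describe how AI Pub Dev collects or stores its metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical sample: (node, account, GPU utilization between 0.0 and 1.0)
Sample = Tuple[str, str, float]


def mean_utilization(samples: Iterable[Sample], by: str) -> Dict[str, float]:
    """Average utilization grouped by 'node' or 'account'."""
    index = {"node": 0, "account": 1}[by]
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for sample in samples:
        key = sample[index]
        totals[key] += sample[2]
        counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}


samples = [
    ("node-1", "alice", 0.5),
    ("node-1", "bob", 1.0),
    ("node-2", "alice", 0.0),
]

per_node = mean_utilization(samples, by="node")
per_account = mean_utilization(samples, by="account")
```

With these samples, `per_node` is `{"node-1": 0.75, "node-2": 0.0}` and `per_account` is `{"alice": 0.25, "bob": 1.0}`, which shows how the same data can answer both the per-node and the per-account question.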

See a demo of AI Pub Dev’s functions.

Visit TEN’s YouTube channel to see demonstrations showing AI Pub Dev’s functions.

Ready to find out more about AI Pub?

Reach out with your questions about AI Pub.

We are here to assist you on your journey of AI development and operation.